Introduction

Meta unveiled Llama Stack at its Connect developer conference. The stack is a suite of tools intended to simplify enterprise adoption of the open-source Llama models, making advanced AI capabilities more accessible and practical for businesses of all sizes.

The Llama Stack introduces a standardized API for model customization and deployment, addressing one of the most pressing challenges in enterprise AI adoption: the complexity of integrating AI systems into existing IT infrastructures. By providing a unified interface for tasks such as fine-tuning, synthetic data generation, and building AI applications, Meta positions Llama Stack as a turnkey solution for organizations looking to leverage AI.

At its core, Llama Stack is designed to simplify the entire AI development lifecycle, from model training to deployment and management. This matters most for enterprises that are grappling with the rapid pace of AI advancements and the need to implement these technologies across business functions. By providing tools for building agentic AI systems (intelligent agents capable of performing complex tasks with minimal human intervention), Llama Stack opens up new possibilities for streamlining operations and improving decision-making.

As we look more closely at the architecture and capabilities of Llama Stack, it becomes clear that Meta is offering not just another AI toolkit but an ecosystem that could reshape how businesses implement open-source, Llama-based AI. For IT and business leaders, understanding what stacks like this can do is key to staying ahead of the curve and using AI to drive growth and innovation.

Understanding Llama Stack

Llama Stack standardizes the building blocks needed to bring generative AI applications to market. In essence, it is a suite of tools and APIs designed to streamline the generative AI lifecycle, from model development to production deployment. It addresses many of the challenges that have historically made enterprise AI adoption complex and resource-intensive.

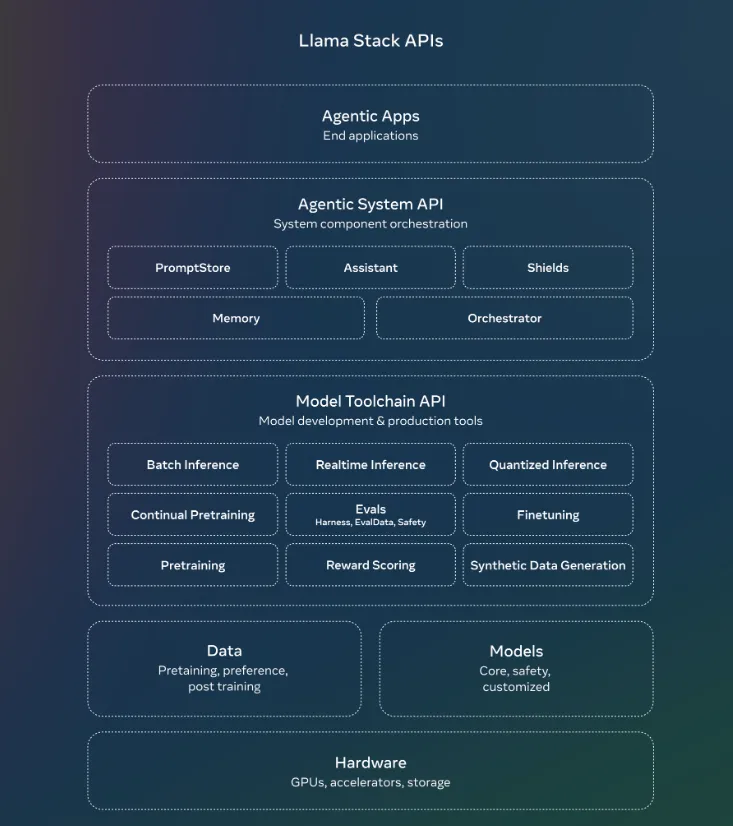

Layered Architecture

The power of Llama Stack lies in its layered architecture, which provides a logical and scalable approach to AI development:

- At the foundation is the Hardware Layer, which optimizes the use of GPUs, accelerators, and storage.

- The Data and Models Layer manages AI resources, including pre-trained models and datasets.

- The Model Toolchain API offers sophisticated tools for model development and production.

- The Agentic System API orchestrates AI components, enabling the creation of intelligent, autonomous systems.

- At the top, Agentic Apps represent the end applications that leverage the full power of Llama Stack.

This architecture allows for flexibility and scalability, crucial factors for businesses looking to grow their AI capabilities over time.
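
To make the ordering of the five layers concrete, here is a minimal Python sketch that models them as an ordered enumeration. The `Layer` enum and the `layers_below` helper are illustrative names chosen for this article, not part of any Llama Stack API; the only thing taken from the description above is the bottom-to-top order.

```python
from enum import IntEnum


class Layer(IntEnum):
    """The five layers named above, numbered from the bottom of the stack up."""

    HARDWARE = 1
    DATA_AND_MODELS = 2
    MODEL_TOOLCHAIN_API = 3
    AGENTIC_SYSTEM_API = 4
    AGENTIC_APPS = 5


def layers_below(layer: Layer) -> list[Layer]:
    """Return every layer the given layer sits on top of, bottom first."""
    return [other for other in Layer if other < layer]


if __name__ == "__main__":
    for layer in Layer:
        below = ", ".join(item.name for item in layers_below(layer)) or "nothing"
        print(f"{layer.name} builds on: {below}")
```

Treating the layers as an ordered type makes the dependency direction explicit: an agentic app relies on everything beneath it, while the hardware layer relies on nothing else in the stack.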

Addressing Developer Pain Points

Llama Stack tackles several key challenges that have traditionally hindered AI adoption:

- Complexity: By providing a unified interface, Llama Stack simplifies the process of model training, fine-tuning, and deployment.

- Resource Intensity: The stack’s efficient use of hardware resources helps reduce the computational costs associated with AI development.

- Integration: Standardized APIs make it easier to integrate AI capabilities into existing business systems and workflows.

- Scalability: The layered architecture supports scaling from small projects to enterprise-wide AI initiatives.
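
The integration point is easier to see in code. The sketch below is a generic illustration of what a standardized interface buys you, assuming a hypothetical `InferenceBackend` contract with a single `complete` method. None of these class or function names come from Llama Stack itself, and both backends are stand-ins that merely transform the prompt.

```python
from typing import Protocol


class InferenceBackend(Protocol):
    """Hypothetical contract: anything with this method can serve completions."""

    def complete(self, prompt: str) -> str: ...


class LocalBackend:
    """Stand-in for a model running on a single machine."""

    def complete(self, prompt: str) -> str:
        return f"[local] {prompt.upper()}"


class HostedBackend:
    """Stand-in for a model served by a cloud provider."""

    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt.upper()}"


def summarize_ticket(backend: InferenceBackend, ticket: str) -> str:
    """Application code depends only on the interface, not on the backend."""
    return backend.complete(f"summarize: {ticket}")


if __name__ == "__main__":
    for backend in (LocalBackend(), HostedBackend()):
        print(summarize_ticket(backend, "late order"))
```

Because `summarize_ticket` only knows about the interface, swapping a local deployment for a hosted one requires no change to application code, which is the practical payoff of a standardized API.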

Enabling Agentic AI Systems

One of the most exciting aspects of Llama Stack is its support for agentic AI systems. These are AI applications capable of autonomous decision-making and action, with the potential to change how automation works across business functions. In marketing, for instance, an agentic AI system could autonomously analyze customer data, create targeted campaigns, and adjust strategies based on real-time performance metrics.

By providing a comprehensive, standardized, and accessible platform for generative AI development, Llama Stack is positioned to accelerate AI adoption across sectors. Its ability to simplify complex processes while enabling capabilities such as agentic AI systems makes it a strong option for enterprises looking to drive innovation and efficiency.

The Architecture of Llama Stack

The Llama Stack’s architecture is designed to provide a comprehensive solution for AI development and deployment. Its layered approach allows for flexibility and scalability, making it suitable for a wide range of enterprise applications. Let’s explore each layer of the stack, from top to bottom, and understand how they contribute to enabling powerful AI solutions.

Agentic Apps: The Pinnacle of AI Applications

At the top of the Llama Stack are Agentic Apps: the end applications that draw on all of the underlying layers. These apps sit at the cutting edge of AI, performing autonomous decision-making and complex problem-solving.

In a business context, Agentic Apps could revolutionize various departments:

- Marketing: Autonomous campaign management systems that analyze market trends, customer behavior, and campaign performance to make real-time adjustments.

- Sales: Intelligent CRM systems that not only predict customer needs but proactively engage with leads and customize pitches.

- Finance: Automated financial analysts that can process vast amounts of data to make investment recommendations or detect fraudulent activities.

- HR: Advanced talent management systems that can handle everything from resume screening to personalized employee development plans.

Agentic System API: Orchestrating AI Components

The Agentic System API is the brain behind the Agentic Apps. It orchestrates various AI components to create cohesive, intelligent systems. Key components include:

- PromptStore: Manages and optimizes prompts for different AI tasks.

- Assistant: Provides conversational AI capabilities.

- Shields: Enforces safety and ethical constraints in AI operations.

- Memory: Enables context retention and learning over time.

- Orchestrator: Coordinates different AI components for complex tasks.

This layer is crucial for creating AI systems that can handle multi-step processes and adapt to changing conditions – essential for automating complex business workflows.
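
As a rough illustration of how these components might fit together, the following Python sketch wires a prompt store, a shield, memory, and an assistant into a single orchestrated turn. It is a toy model written for this article under stated assumptions: the class names echo the component names above, but every method, field, and behavior here is hypothetical and does not reflect the actual Agentic System API. The assistant is any callable that maps a prompt to a reply, standing in for a real model call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PromptStore:
    """Keeps named prompt templates in one place."""

    templates: dict[str, str] = field(default_factory=dict)

    def render(self, name: str, **values: str) -> str:
        return self.templates[name].format(**values)


@dataclass
class Shield:
    """Blocks text containing any banned term (a deliberately crude check)."""

    banned_terms: tuple[str, ...] = ()

    def allows(self, text: str) -> bool:
        lowered = text.lower()
        return not any(term in lowered for term in self.banned_terms)


@dataclass
class Memory:
    """Retains earlier turns so later prompts can include context."""

    turns: list[str] = field(default_factory=list)

    def remember(self, turn: str) -> None:
        self.turns.append(turn)

    def context(self) -> str:
        return "\n".join(self.turns)


@dataclass
class Orchestrator:
    """Runs one turn: shield the input, build the prompt, call the assistant,
    shield the output, then store the exchange in memory."""

    prompts: PromptStore
    shield: Shield
    memory: Memory
    assistant: Callable[[str], str]  # stand-in for a real model call

    def run_turn(self, user_message: str) -> str:
        if not self.shield.allows(user_message):
            return "Request blocked by shield."
        prompt = self.prompts.render(
            "chat", context=self.memory.context(), message=user_message
        )
        reply = self.assistant(prompt)
        if not self.shield.allows(reply):
            return "Response blocked by shield."
        self.memory.remember(f"user: {user_message}")
        self.memory.remember(f"assistant: {reply}")
        return reply
```

In this sketch the shield runs on both the input and the output, and only exchanges that pass both checks are written to memory, so blocked content never becomes context for later turns.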

Model Toolchain API: The Engine of AI Development

The Model Toolchain API provides the core tools for AI model development and production. It includes:

- Batch, Realtime, and Quantized Inference: Enables efficient model deployment for various use cases.

- Continual Pretraining and Finetuning: Allows models to be updated and specialized for specific tasks.

- Evals, Reward Scoring, and Synthetic Data Generation: Supports measuring and improving model quality and performance.

For enterprises, this layer provides the flexibility to develop and deploy AI models that are tailored to their specific business needs, whether it’s analyzing customer sentiment in real time or optimizing supply chain operations.
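
To give a feel for what an eval does, here is a small, self-contained Python sketch of an exact-match accuracy check on a sentiment task. It is a generic illustration, not the Llama Stack evals API: `exact_match_accuracy`, `toy_sentiment_model`, and the `EXAMPLES` data are all made up for this article, and the "model" is a keyword lookup standing in for a fine-tuned model.

```python
from typing import Callable, Sequence


def exact_match_accuracy(
    model: Callable[[str], str],
    examples: Sequence[tuple[str, str]],
) -> float:
    """Fraction of examples where the model's answer equals the expected one.

    Comparison ignores surrounding whitespace and letter case.
    """
    if not examples:
        raise ValueError("need at least one example")
    correct = sum(
        model(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in examples
    )
    return correct / len(examples)


def toy_sentiment_model(text: str) -> str:
    """Hypothetical stand-in for a fine-tuned model: keyword lookup only."""
    return "negative" if "late" in text.lower() else "positive"


# Invented examples; the last two are chosen to expose the keyword shortcut.
EXAMPLES = [
    ("The delivery was late again.", "negative"),
    ("Great support, thank you!", "positive"),
    ("Product broke after a day.", "negative"),
    ("Arrived late but works fine.", "positive"),
]

if __name__ == "__main__":
    score = exact_match_accuracy(toy_sentiment_model, EXAMPLES)
    print(f"exact-match accuracy: {score:.2f}")
```

The keyword model gets the first two examples right and the last two wrong, which is the point of running evals before deployment: a shortcut that looks plausible on easy inputs is exposed as soon as the test set includes harder ones.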

Data and Models Layer: The Foundation of AI Intelligence

This layer manages the essential resources for AI:

- Data: Includes pretraining data, preference data, and post-training data.

- Models: Encompasses core models, safety models, and customized models.

Effective management of this layer is crucial for maintaining high-quality, relevant AI capabilities. It allows businesses to leverage their proprietary data to create unique AI solutions that provide a competitive edge.

Hardware Layer: The Bedrock of AI Computation

At the foundation of the Llama Stack is the Hardware Layer, which includes GPUs, accelerators, and storage solutions. This layer ensures that the AI applications have the computational power they need to operate efficiently.

For enterprises, the flexibility of this layer means that Llama Stack can be deployed across various environments – from on-premises data centers to cloud infrastructures – allowing IT leaders to align their AI initiatives with their broader IT strategies.

The layered architecture of Llama Stack provides a comprehensive framework for AI development and deployment. By offering solutions at every level – from hardware optimization to end-user applications – it enables businesses to implement sophisticated AI solutions that can drive innovation and efficiency across multiple departments. For IT leaders, understanding this architecture is key to leveraging Llama Stack’s full potential in their organizations.

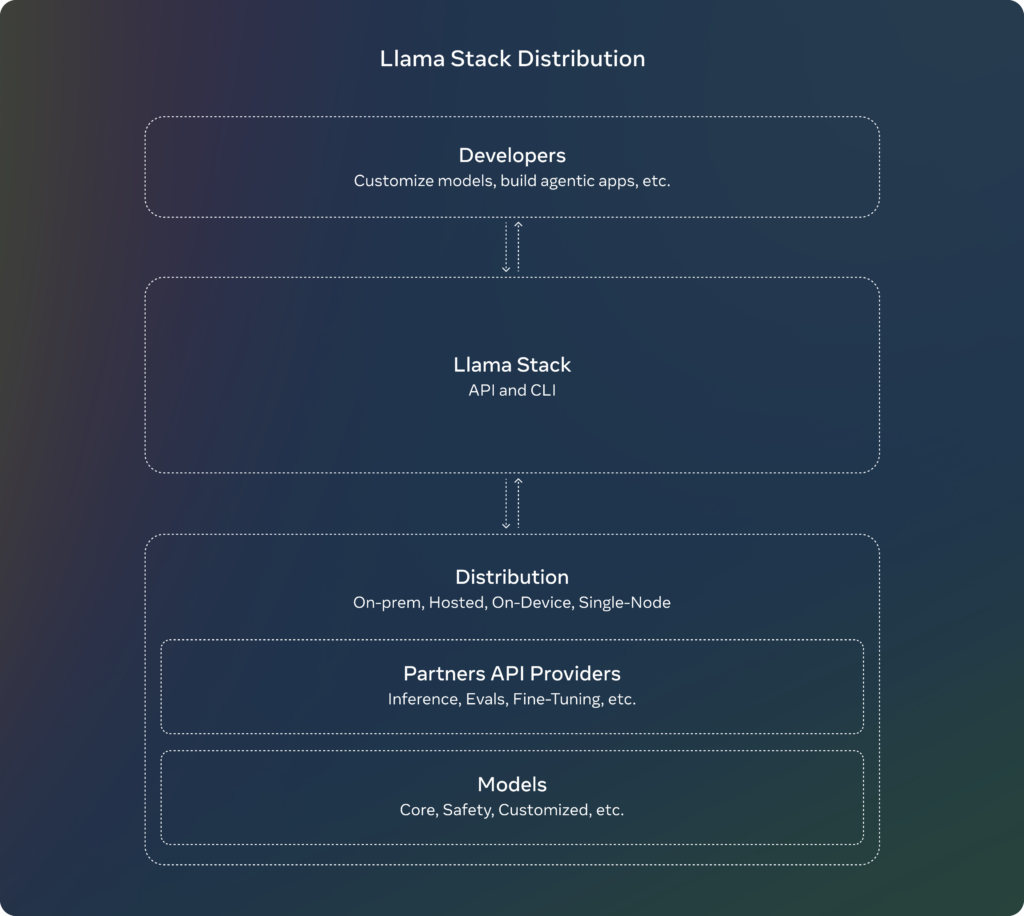

Llama Stack Distribution and Deployment

The flexibility of Llama Stack’s distribution and deployment options is one of its key strengths, allowing businesses to implement AI solutions in a way that best fits their existing infrastructure and needs.

Distribution Structure

Llama Stack’s distribution structure is designed to cater to a wide range of deployment scenarios:

- Single-node Llama Stack Distribution: Available through Meta’s internal implementation and Ollama, this option is ideal for smaller-scale deployments or testing environments.

- Cloud Llama Stack Distributions: Offered via partnerships with AWS, Databricks, Fireworks, and Together, this option provides scalability and flexibility for businesses already leveraging cloud infrastructure.

- On-device Llama Stack Distribution: Implemented on iOS via PyTorch ExecuTorch, this enables AI capabilities on mobile devices, opening up possibilities for edge computing and mobile-first AI applications.

- On-premises Llama Stack Distribution: Supported by Dell, this option caters to organizations with strict data security requirements or those preferring to keep their AI infrastructure in-house.
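
The four options above can be summarized as a small lookup table. The Python sketch below encodes them as data and adds a simple rule of thumb for choosing among them. The provider names are taken from the list above; the `Distribution` type, the `pick_distribution` function, and its priority order are assumptions made for illustration, not an official selection guide or a real registry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Distribution:
    name: str
    providers: tuple[str, ...]
    runs_on: str  # "single-node", "cloud", "device", or "on-premises"


# Entries mirror the list above; nothing here is queried from a real registry.
DISTRIBUTIONS = (
    Distribution("Single-node", ("Meta internal implementation", "Ollama"), "single-node"),
    Distribution("Cloud", ("AWS", "Databricks", "Fireworks", "Together"), "cloud"),
    Distribution("On-device", ("PyTorch ExecuTorch (iOS)",), "device"),
    Distribution("On-premises", ("Dell",), "on-premises"),
)


def pick_distribution(
    *,
    targets_mobile: bool = False,
    data_must_stay_in_house: bool = False,
    needs_elastic_scale: bool = False,
) -> Distribution:
    """Hypothetical rule of thumb; requirements are checked in priority order."""
    if targets_mobile:
        wanted = "device"
    elif data_must_stay_in_house:
        wanted = "on-premises"
    elif needs_elastic_scale:
        wanted = "cloud"
    else:
        wanted = "single-node"  # default for testing and small deployments
    return next(d for d in DISTRIBUTIONS if d.runs_on == wanted)


if __name__ == "__main__":
    choice = pick_distribution(data_must_stay_in_house=True)
    print(choice.name, "via", ", ".join(choice.providers))
```

A real decision would weigh cost, latency, and compliance in more detail, but even this crude mapping shows how the distribution options line up with common infrastructure constraints.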

Developers and Customization

Llama Stack provides developers with the tools to customize models and build AI applications tailored to specific business needs. This customization capability is crucial for creating unique AI solutions that can provide a competitive edge in areas such as:

- Developing industry-specific chatbots for customer service

- Creating custom AI models for predictive maintenance in manufacturing

- Building specialized AI assistants for different roles within an organization

Llama Stack API and CLI

The Llama Stack API and Command Line Interface (CLI) serve as the core interaction layer for developers and IT professionals. They provide:

- Streamlined access to Llama Stack’s capabilities

- Efficient management of AI models and applications

- Integration possibilities with existing tools and workflows

Partner API Providers

Partnerships with API providers enhance Llama Stack’s capabilities, offering additional features such as:

- Advanced inference capabilities

- Specialized evaluation tools

- Fine-tuning options for specific use cases

These partnerships expand the ecosystem around Llama Stack, providing businesses with a wider range of tools and services to support their AI initiatives.

The Future of AI Development with Llama Stack

As we look ahead, Llama Stack is poised to play a significant role in shaping the future of open-source AI development and deployment in enterprise settings.

Potential Impact on AI Strategies

Llama Stack has the potential to democratize AI development, allowing businesses of all sizes to leverage advanced AI capabilities. This could lead to:

- More widespread adoption of AI across various industries

- Increased innovation in AI applications for business processes

- A shift towards more AI-driven decision-making in organizations

Addressing Challenges

As with any emerging technology, there are challenges that need to be addressed:

- Scalability: Ensuring that Llama Stack can handle the growing AI needs of businesses as they expand their use of the technology.

- Security: Maintaining robust security measures to protect sensitive data used in AI models and applications.

- Privacy: Addressing concerns about data privacy, especially in light of increasingly stringent regulations.

Llama Stack’s ongoing development will likely focus on these areas, providing IT leaders with more robust tools to manage these challenges.

Democratizing AI Development

One of the most significant impacts of Llama Stack could be its role in democratizing AI development. By providing a standardized, accessible platform for AI development, it could:

- Enable smaller businesses to compete with larger corporations in AI capabilities

- Foster a new wave of AI-driven startups and innovations

- Accelerate the integration of AI into everyday business operations

Conclusion

For enterprises, Llama Stack represents a powerful tool in the journey toward AI-driven business transformation. Its comprehensive approach to AI development and deployment, coupled with its flexibility and scalability, makes it a compelling option for organizations looking to leverage AI across their operations.

As AI continues to evolve and become more integral to business success, platforms like Llama Stack will play a crucial role in enabling organizations to stay competitive. By providing the tools to create sophisticated AI applications, from intelligent automation in HR to predictive analytics in finance, Llama Stack is helping to shape a future where AI is not just a buzzword, but a fundamental part of how businesses operate and innovate.

The time is ripe for enterprises to explore the capabilities of Llama Stack and consider how it can be integrated into their AI strategies. By doing so, they can position their organizations at the forefront of the AI revolution, ready to harness its power to drive growth, efficiency, and innovation in the years to come.