Artificial intelligence has come a long way, but there’s a new frontier that promises to revolutionize how AI agents think and perform. It’s not just about crafting better prompts anymore; the future lies in what’s being called context engineering. This emerging discipline transforms AI from a simple question-answering tool into a sophisticated system with a “memory,” the ability to process vast amounts of information, and the power to generate truly intelligent responses.

In this article, we’ll explore the core ideas behind context engineering, its three essential skills, and how mastering these can unlock powerful new AI capabilities. We’ll also examine the biggest challenge AI still faces and why the role of the human “master chef” or context engineer is more important than ever.

Table of Contents

- From Hallucinations to a Smarter AI Diet

- Beyond Prompt Engineering: The Rise of Context Engineering

- The Three Core Skills of Context Engineering

- What Happens When You Master Context Engineering?

- The Fundamental Challenge: The Comprehension-Generation Gap

- The Next Frontier: Who Will Teach the AI Agents?

- FAQ

From Hallucinations to a Smarter AI Diet

We’ve all experienced it: you ask an AI a straightforward question, and instead of a helpful answer, you get something bizarre or nonsensical—but delivered with absolute confidence. Researchers call these mistakes hallucinations, and they are one of the most frustrating aspects of interacting with AI today.

But what if the problem isn’t that the AI is “dumb,” but rather that it’s been poorly fed? The quality of an AI’s output depends heavily on the quality of the information and context it receives. It’s the classic principle of “garbage in, garbage out.” The secret to smarter AI agents may not be a bigger brain at all, but a much better diet of information.

Beyond Prompt Engineering: The Rise of Context Engineering

Most people have heard of prompt engineering—the craft of writing perfect prompts to coax better answers from AI. But that’s just the tip of the iceberg. The whole field is shifting towards something much bigger: treating AI as a system you must build and manage holistically. This is context engineering.

Think of prompt engineering like walking into a kitchen and telling the chef, “Bake at 350 degrees.” Useful, but limited. Context engineering is like being the master chef who runs the entire kitchen—sourcing the best ingredients, prepping them perfectly, managing the pantry, and orchestrating every detail to create an amazing meal.

This isn’t just a metaphor. A survey of more than 1,400 research papers formally defines context engineering as the systematic optimization of information payloads: all the data, instructions, and context fed into large language models to get the best possible performance out of them.
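To make “optimizing the payload” a little more concrete, here is a minimal sketch of one simple interpretation: pick the most important context pieces that fit a fixed budget, then lay them out in a consistent order. It assumes word counts as a stand-in for tokens and hand-assigned priorities; the `Piece` type, `assemble_payload` function, and section names are hypothetical, not taken from the survey or any library.

```python
from dataclasses import dataclass


@dataclass
class Piece:
    kind: str      # e.g. "instructions", "memory", "retrieved", "query" (hypothetical labels)
    text: str
    priority: int  # higher means more important (hand-assigned, an assumption)


# Fixed layout for the final payload; unknown kinds go last.
LAYOUT = ["instructions", "memory", "retrieved", "query"]


def assemble_payload(pieces: list[Piece], budget_words: int) -> str:
    """Greedily keep the highest-priority pieces that fit the word budget,
    then order the survivors by LAYOUT and join them into one prompt."""
    chosen, used = [], 0
    for p in sorted(pieces, key=lambda p: p.priority, reverse=True):
        n = len(p.text.split())  # word count as a rough proxy for tokens
        if used + n <= budget_words:
            chosen.append(p)
            used += n
    chosen.sort(key=lambda p: LAYOUT.index(p.kind) if p.kind in LAYOUT else len(LAYOUT))
    return "\n\n".join(f"## {p.kind}\n{p.text}" for p in chosen)


pieces = [
    Piece("instructions", "Answer briefly and cite sources.", 10),
    Piece("query", "What changed in the rate decision?", 9),
    Piece("retrieved", "The central bank raised rates by a quarter point.", 5),
    Piece("memory", "User prefers short answers about finance news and markets.", 3),
]
payload = assemble_payload(pieces, budget_words=20)
```

With a 20-word budget, the low-priority memory note is the piece that gets dropped. Greedy packing is only a heuristic; real systems typically count actual tokens and score relevance per query.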

The Three Core Skills of Context Engineering

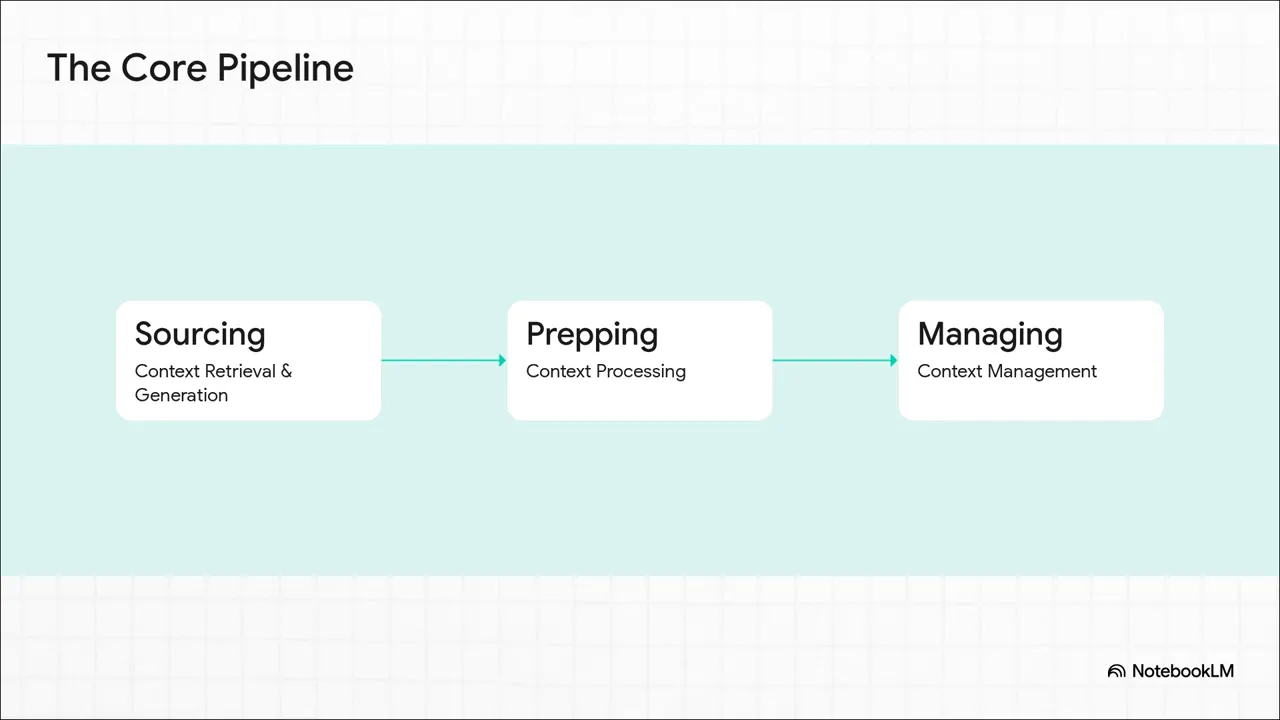

Running an AI’s kitchen comes down to three core skills that form a clear pipeline for truly intelligent answers:

- Sourcing the Ingredients: Gathering the right, relevant information beyond just the prompt.

- Prepping the Ingredients: Processing and refining raw data to make it usable.

- Managing the Pantry: Organizing and storing information efficiently for future use.

Sourcing the Ingredients: Context Retrieval and Generation

Sourcing is much more than typing a query. Techniques such as Retrieval-Augmented Generation (RAG) let AI agents reach out and pull in fresh information at query time, whether that’s today’s news or facts from a structured knowledge graph. Think of a knowledge graph as a family tree for ideas: it maps out how concepts connect.
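Here is a minimal sketch of the sourcing step: retrieve the most relevant passages, look up related knowledge-graph facts, and assemble both into the prompt the model will see. It assumes a tiny in-memory corpus and a toy graph of (subject, relation, object) triples, both hypothetical. It also ranks passages by bag-of-words cosine similarity instead of the learned embeddings a production RAG system would usually use. The function names are illustrative only.

```python
import math
import re
from collections import Counter

# Toy document store standing in for a search index or news feed (hypothetical data).
DOCUMENTS = [
    "The central bank raised interest rates by a quarter point today.",
    "A new recipe for sourdough bread uses a long cold fermentation.",
    "Markets fell after the interest rate decision was announced.",
]

# Toy knowledge graph as (subject, relation, object) triples (hypothetical data).
TRIPLES = [
    ("interest rates", "set_by", "central bank"),
    ("central bank", "influences", "markets"),
]


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def graph_facts(query: str) -> list[str]:
    """Return triples whose subject or object is mentioned in the query."""
    q = query.lower()
    return [f"{s} {r.replace('_', ' ')} {o}" for s, r, o in TRIPLES if s in q or o in q]


def build_prompt(query: str) -> str:
    passages = "\n".join(f"- {p}" for p in retrieve(query))
    facts = "\n".join(f"- {f}" for f in graph_facts(query))
    return f"Context passages:\n{passages}\nRelated facts:\n{facts}\n\nQuestion: {query}"


prompt = build_prompt("What happened to interest rates today?")
```

The model then answers from `prompt` rather than from its training data alone, which is what lets a RAG-backed agent talk about information that is newer than the model itself.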

Prepping the Ingredients: Context Processing

Once the data is sourced, it needs to be prepped. Part of this is handling massive volumes of text, such as digesting a 100-page report in one go; this skill is called long-context processing. Prepping also includes self-refinement, where the AI critiques its own output and iterates on it to deliver a better answer without human intervention.
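Self-refinement can be sketched as a generate, critique, refine loop that stops once the critic has nothing left to say or a round limit is hit. In this sketch, `generate`, `critique`, and `refine` are hypothetical callables standing in for model calls; the toy versions only check a draft for required keywords so the loop can run without any model.

```python
from typing import Callable, Optional


def self_refine(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], Optional[str]],
    refine: Callable[[str, str, str], str],
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Produce a draft, then alternate critique and refinement until the critic
    returns None (satisfied) or max_rounds refinements have been made.
    Returns (final_draft, number_of_refinements)."""
    draft = generate(task)
    for round_no in range(max_rounds):
        feedback = critique(task, draft)
        if feedback is None:  # critic is satisfied, so stop early
            return draft, round_no
        draft = refine(task, draft, feedback)
    return draft, max_rounds


# Toy stand-ins for model calls (hypothetical): a good summary must mention every keyword.
REQUIRED = ["revenue", "costs", "outlook"]


def toy_generate(task: str) -> str:
    return "Summary: revenue grew."


def toy_critique(task: str, draft: str) -> Optional[str]:
    missing = [w for w in REQUIRED if w not in draft]
    return f"Mention: {missing[0]}" if missing else None


def toy_refine(task: str, draft: str, feedback: str) -> str:
    return draft + " " + feedback.split(": ")[1] + " noted."


answer, rounds = self_refine("summarize the report", toy_generate, toy_critique, toy_refine)
```

The round limit matters in practice: without it, a critic that is never satisfied would keep the loop (and the bill for model calls) running indefinitely.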

Managing the Pantry: Context Management

Finally, a well-organized pantry is essential. For AI, this translates to context management, which gives the agent memory. With memory hierarchies, an AI can retain previous conversations and topics. Techniques like context compression (think vacuum-sealing food) let the AI fit more information into its limited working memory, the context window.
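A two-tier memory hierarchy with compression can be sketched in a few lines. This is an illustrative design under stated assumptions, not any specific framework’s memory system. Recent turns stay verbatim in a short-term buffer capped by a word budget (a stand-in for tokens), and older turns are evicted to a long-term store in compressed form. The “compression” here is a hypothetical placeholder that keeps only the first few words; a real system might ask a model to summarize instead.

```python
from collections import deque


class TieredMemory:
    """Short-term tier: recent turns kept verbatim within a word budget.
    Long-term tier: older turns stored in compressed form."""

    def __init__(self, budget_words: int = 20, summary_words: int = 5):
        self.budget_words = budget_words
        self.summary_words = summary_words
        self.short_term = deque()
        self.long_term = []

    @staticmethod
    def _words(text: str) -> int:
        return len(text.split())

    def _compress(self, turn: str) -> str:
        # Placeholder compression (assumption): keep the first few words only.
        words = turn.split()
        tail = " ..." if len(words) > self.summary_words else ""
        return " ".join(words[: self.summary_words]) + tail

    def add(self, turn: str) -> None:
        self.short_term.append(turn)
        # Evict the oldest turns until the short-term tier fits the budget,
        # always keeping at least the newest turn.
        while len(self.short_term) > 1 and sum(map(self._words, self.short_term)) > self.budget_words:
            self.long_term.append(self._compress(self.short_term.popleft()))

    def context(self) -> str:
        """Render both tiers as the text that would be placed in the prompt."""
        summaries = "\n".join(f"[summary] {s}" for s in self.long_term)
        recent = "\n".join(f"[recent] {t}" for t in self.short_term)
        return "\n".join(part for part in (summaries, recent) if part)


mem = TieredMemory(budget_words=10, summary_words=3)
mem.add("I prefer window seats on long flights")
mem.add("My budget for the Lisbon trip is about two thousand euros")
```

After the second turn, the first one no longer fits the budget and survives only as the compressed note `I prefer window ...`, while the newest turn stays verbatim.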

What Happens When You Master Context Engineering?

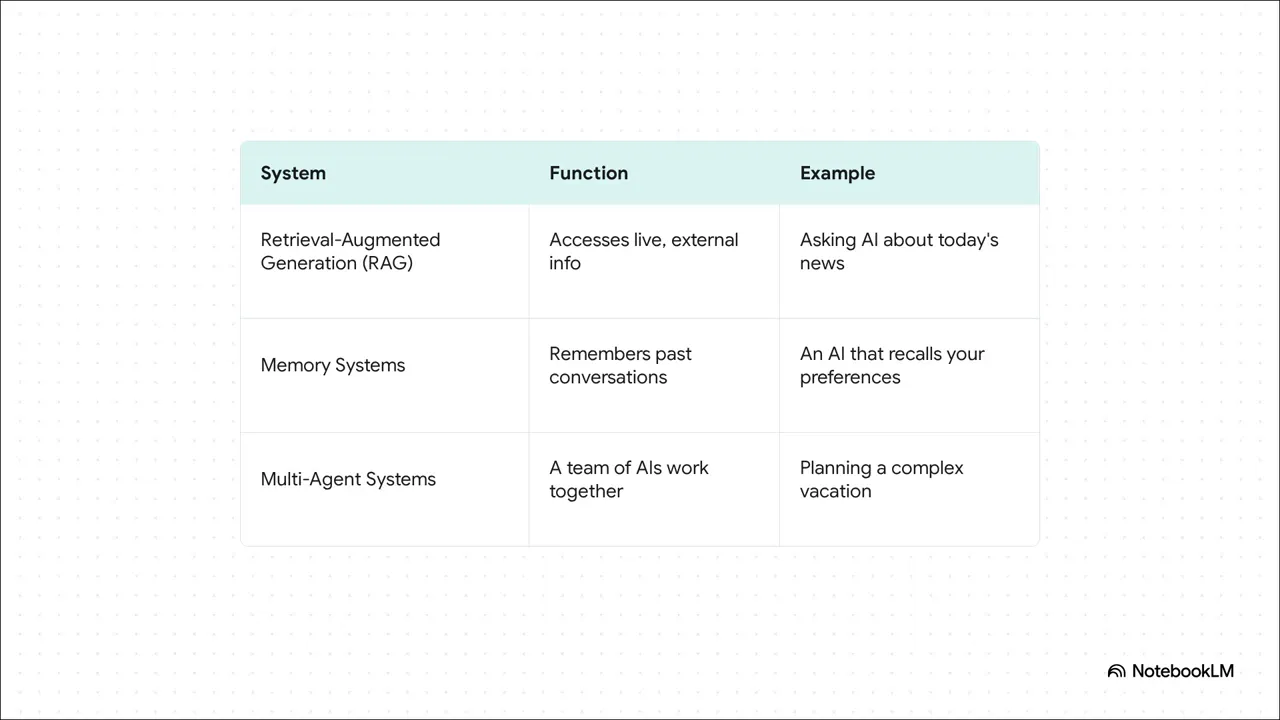

When you combine sourcing, prepping, and managing context effectively, you don’t just get better answers; you unlock entirely new AI capabilities usable in the real world:

- Live Updates: AI agents using RAG can provide real-time news and information.

- Personal Assistants with Memory: AI that truly remembers your preferences and past interactions.

- Multi-Agent Systems: Teams of specialized AI agents working together—for example, planning your entire vacation with agents booking flights, hotels, and restaurants.
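The vacation example can be sketched as a sequential orchestrator over a shared “blackboard” context that every agent reads from and writes to. This is a hedged illustration rather than any particular framework’s design. The agents are hypothetical plain functions standing in for model-backed specialists, and the request fields (`origin`, `destination`, `start`, `nights`) are assumptions. Real systems often run agents in parallel or let a planner decide which agent to call next.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SharedContext:
    """Blackboard that every agent can read and append to."""
    request: dict
    notes: list[str] = field(default_factory=list)


# Each "agent" is a plain function standing in for an LLM-backed specialist (hypothetical).
def flight_agent(ctx: SharedContext) -> str:
    r = ctx.request
    return f"Flight: {r['origin']} -> {r['destination']} on {r['start']}"


def hotel_agent(ctx: SharedContext) -> str:
    r = ctx.request
    return f"Hotel: {r['nights']} nights in {r['destination']}"


def restaurant_agent(ctx: SharedContext) -> str:
    # Reads an earlier agent's note from the shared context to stay consistent with it.
    hotel = next((n for n in ctx.notes if n.startswith("Hotel:")), "no hotel yet")
    return f"Dinner: table near hotel ({hotel})"


def orchestrate(request: dict, agents: list[Callable[[SharedContext], str]]) -> list[str]:
    """Run the specialists in order; each one sees all results produced so far."""
    ctx = SharedContext(request)
    for agent in agents:
        ctx.notes.append(agent(ctx))
    return ctx.notes


plan = orchestrate(
    {"origin": "NYC", "destination": "Lisbon", "start": "June 1", "nights": 4},
    [flight_agent, hotel_agent, restaurant_agent],
)
```

The shared context is what turns separate specialists into a team: the restaurant agent’s output depends on what the hotel agent already decided.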

The Fundamental Challenge: The Comprehension-Generation Gap

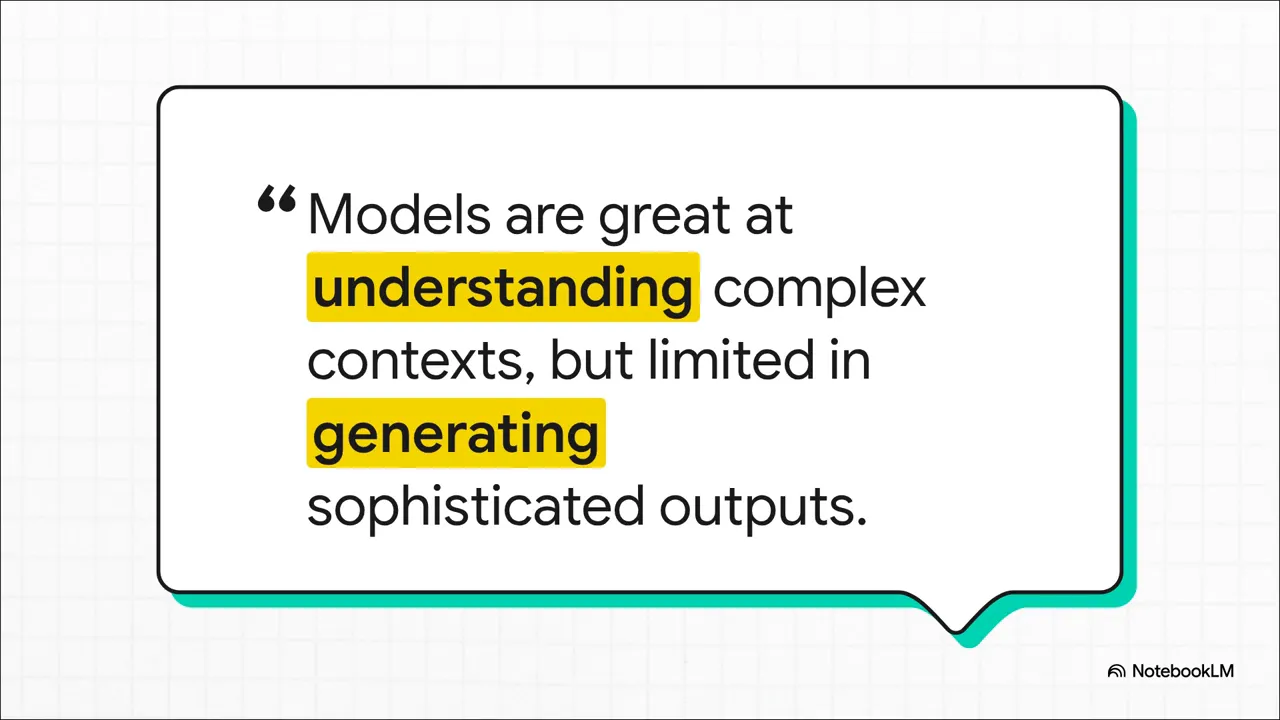

Despite these advances, there’s a critical gap that context engineering hasn’t yet closed. According to the same body of research, AI agents have become incredible students: they can read and understand massive amounts of complex information with impressive skill. But when it comes to being authors, creating original, long, and coherent content like detailed reports or novels, they still struggle.

“They can read the textbook, but they can’t write a new one. Not yet.”

This is known as the comprehension-generation gap. In longer, original pieces, AI agents tend to lose coherence and the thread of their ideas, which limits their ability to generate sophisticated new content.

The Next Frontier: Who Will Teach the AI Agents?

This gap leads to a fascinating question: as AI agents become better learners, who will be the master chefs, the context engineers, teaching them not only to understand the world but also to create new, meaningful content within it?

Context engineering marks a significant leap forward, but human expertise will continue to be essential in guiding AI agents toward their full creative potential. The journey to smarter AI is just beginning, and the role of the context engineer is more vital than ever.

Thanks for diving into this exciting new frontier with me.

FAQ

What is context engineering in AI?

Context engineering is the discipline of optimizing all the data, instructions, and contextual information fed into AI models to maximize their performance. It goes beyond prompt engineering to treat AI as a system that requires careful sourcing, processing, and management of information.

How does context engineering improve AI agents?

By sourcing relevant information, preparing it for use, and managing memory efficiently, context engineering enables AI agents to give smarter, more accurate, and more context-aware responses, unlocking new capabilities like live data retrieval and persistent memory.

What are AI agents?

AI agents are autonomous or semi-autonomous systems powered by artificial intelligence that can perform tasks, make decisions, and interact with data and users. When empowered with context engineering, these agents become more intelligent, flexible, and capable.

What is the comprehension-generation gap?

This gap refers to the current limitation where AI agents can understand and process large amounts of information well, but struggle to generate long, coherent, original content like detailed reports or novels.

Why is human involvement still important in AI development?

Humans are essential as context engineers, or “master chefs,” who teach AI agents how to effectively understand and create meaningful content. Despite recent advances, AI still needs guidance to bridge the comprehension-generation gap and reach its full potential.
