While process automation is all the rage, the understated hero of all this has been the humble processes themselves. Processes and their continuous improvement have been the bedrock for the evolution of industries and the increase in affluence.

It is an interesting story of how processes came to be, and how we have continuously improved them. We started with different ad-hoc techniques. These led to many formal techniques like Six Sigma. We then built on these techniques once computer data became available. And now, AI is helping us take another big leap to make the processes autonomous.

It has been quite a journey for the humble process. Let us dive in!

Birth Of Processes And Early Improvements

Have you ever wondered how the business processes of today came to be?

Well, it all started with the concept of “Division of labor” introduced by Adam Smith in 1776. Talk of one idea changing the world! His idea was that you could improve output and decrease costs by breaking down a job into tiny parts. These “tiny” components of work led to specialized labor. That in turn led to the creation of the business functions and early processes that we see today.

In 1911, Frederick Winslow Taylor went further. He captured the time taken for individual tasks with a stopwatch. Companies at that time used this to cut all unnecessary tasks and standardize the processes. Taylor also helped organizations come up with best practices for work. He introduced a structured division of management and employees. As you can see, he was our first management consultant! 🙂

In 1913, Henry Ford applied Taylor’s ideas to develop the first moving assembly line. This led to a huge transformation in work and society. The assembly line helped Ford reduce the time it took to build a car from more than 12 hours to 93 minutes!

These early process improvements largely originated in the West. The next phase of process improvement started in the East.

Formal Process Improvement Methods

As the US and other nations helped Japan recover after World War II, they brought in experts like Dr. W. Edwards Deming to introduce process improvement. Building on these concepts, Japanese manufacturers took process efficiency to the next level.

This led to the development of many new and formal techniques for process improvement. Here is a quick introduction to a few of the prominent ones:

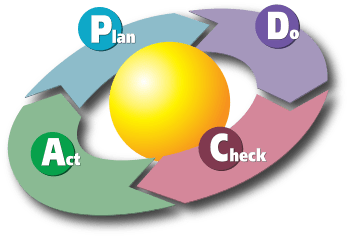

PDCA

Deming introduced this technique to the Japanese industry in the 1950s. PDCA stands for Plan-Do-Check-Act. It is an iterative process control and improvement technique.

As you can infer, it is a structured method wherein you:

- First, establish business objectives and processes to deliver results (Plan).

- Carry out the plan (Do).

- Check data and results for effectiveness (Check).

- Finally, improve the process based on the data (Act or Adjust).

- Continue the loop.

Deming’s work in Japan laid the foundations for much of the process improvement work there. Next, let’s look at a popular technique from Toyota.

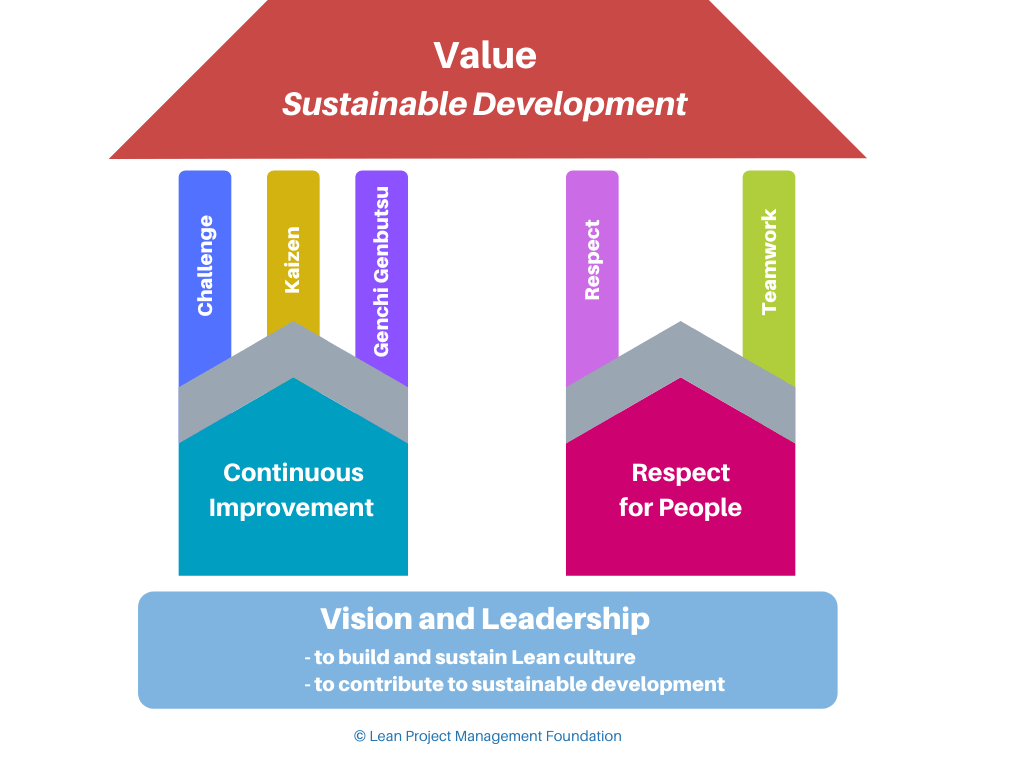

Lean

Lean is a set of minimalist philosophies developed at Toyota in the 1960s. It is a customer-centric approach to process improvement by eliminating waste. It uses PDCA to identify and drop non-value-adding activities (“waste”) from the processes.

“Lean” is a rebranded version of the Toyota Production System for the Western audience. In the 90s, this led to a wave of “process reengineering” efforts within organizations. Here are the pillars for Lean:

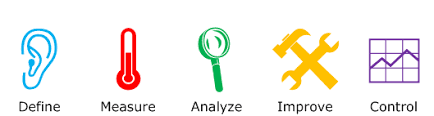

Six Sigma – DMAIC

Six Sigma is another popular process method developed in the US at Motorola in the late 1980s. It focuses on finding and removing the causes of defects in a process. The goal is to ensure error-free performance.

Six Sigma uses a structured method called DMAIC for process improvement. It is an acronym for:

- Define: Define the problem.

- Measure: Quantify the problem

- Analyze: Identify the cause

- Improve: Solve root cause and verify improvement

- Control: Monitor and keep improving

It is a data-driven cycle to improve, optimize and stabilize business processes.

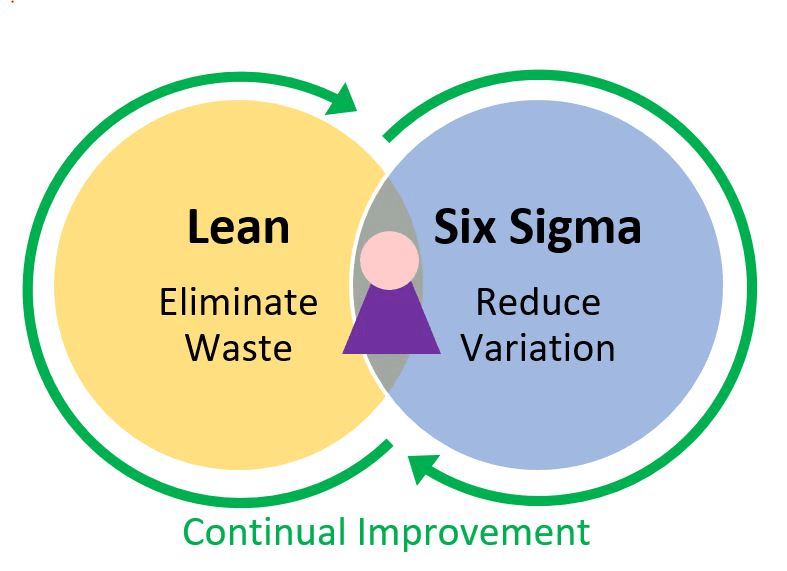

Lean Six Sigma

As the name indicates, this method combines the best of Lean and Six Sigma. It is a systematic method to remove waste and reduce process variation. It helps reduce process defects and waste and provides a framework for improvement.

So, those are some prominent process improvement methodologies. Most of them originated in manufacturing and are still largely used there.

With the advent of the information age, most of the work moved to computers. These computer systems produce enough data that we can use to build on the above techniques.

So, we started adding techniques that can use system data for process improvement. One such initial method was Process mining.

Process Mining – Process Improvement With Data

Process mining started in the late 90s based on research at IBM. Interest in the area was quite low, and it remained mostly an academic topic.

But some researchers, like Wil van der Aalst, persisted. He realized that the existing process understanding methods were quite limited. He knew that event data from systems could help us understand the processes better.

He disseminated this information through his book “Process Mining: Data Science in Action”. Later he also released a course of the same name on Coursera. Both led to a wider understanding of and interest in the topic.

In 2011, Celonis was founded and set out to bring more “data-driven rigor” and use computer log data to map out processes. They helped bring Process mining to organizations by leveraging their close relationship with SAP.

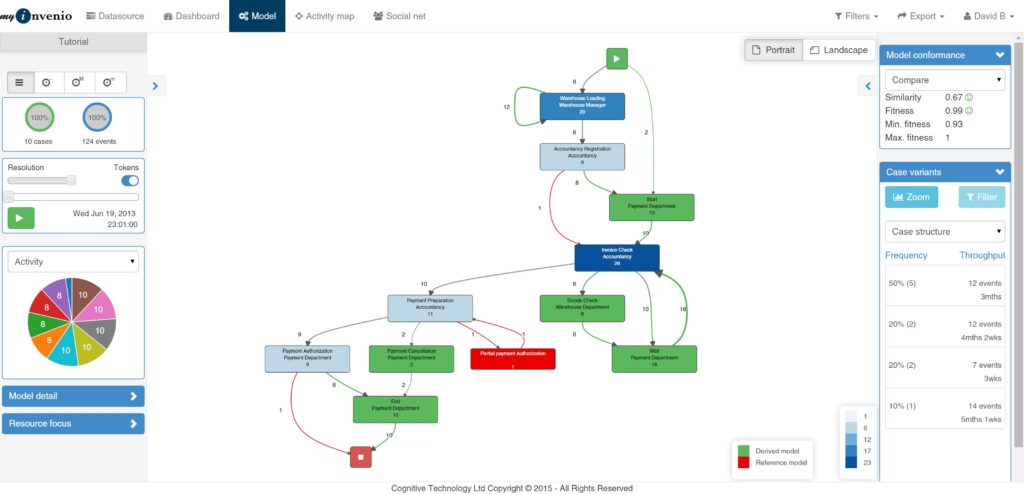

Process mining uses data from computer logs to provide a visual representation of processes. It then highlights the variations and possible inefficiencies.
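To make this concrete, here is a minimal sketch of the core idea in Python. It assumes a tiny, made-up event log of (case ID, activity, timestamp) rows; the order names and activities are hypothetical. It is not how any particular process mining product works. It only counts which activity directly follows which (the raw material for a process map) and how many distinct paths, or variants, the cases take.

```python
from collections import Counter, defaultdict

# Hypothetical event log: (case_id, activity, timestamp) rows, as might be
# exported from an order-handling system. All values are made up.
event_log = [
    ("order-1", "Create order", 1),
    ("order-1", "Approve order", 2),
    ("order-1", "Ship order", 3),
    ("order-2", "Create order", 1),
    ("order-2", "Approve order", 2),
    ("order-2", "Ship order", 3),
    ("order-3", "Create order", 1),
    ("order-3", "Reject order", 2),
]


def build_traces(log):
    """Group events by case and order each case's activities by timestamp."""
    cases = defaultdict(list)
    for case_id, activity, timestamp in log:
        cases[case_id].append((timestamp, activity))
    return {
        case_id: tuple(activity for _, activity in sorted(events))
        for case_id, events in cases.items()
    }


def directly_follows(traces):
    """Count how often activity A is immediately followed by activity B."""
    edges = Counter()
    for trace in traces.values():
        for a, b in zip(trace, trace[1:]):
            edges[(a, b)] += 1
    return edges


traces = build_traces(event_log)
edges = directly_follows(traces)      # edges of a simple process map
variants = Counter(traces.values())   # distinct paths and their frequency

for (a, b), count in edges.items():
    print(f"{a} -> {b}: {count}")
```

The edge counts are what a tool would draw as arrows between activities, and the less frequent variants are where you would start looking for variations and possible inefficiencies.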

With process mining, we had the first practical method to use data-centric analysis for process improvement. This would soon become a key enabler for process automation.

Process Improvement Meets Automation

Around the 2010s, there was another trend taking off – the automation of computer-based work.

Companies like Blue Prism and UiPath were using “Software bots” to automate tasks. These Robotic Process Automation (RPA) tools were good at automating repetitive tasks. So many organizations embraced them to improve efficiencies.

Organizations soon realized that process mining could help identify processes ripe for automation. For instance, process mining could uncover processes with few variations but large volumes. And so, process mining and related techniques along with RPA have become a popular route for process improvement.
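As a rough illustration of that screening step, the sketch below flags processes that have a large volume and where a single variant covers most cases. The process names, case counts, and both thresholds (`min_cases`, `min_top_share`) are assumptions made up for this example, not industry standards.

```python
from collections import Counter

# Hypothetical traces per process: each trace is the ordered tuple of
# activities for one case. Names and counts are invented for illustration.
process_traces = {
    "Invoice entry": [("Receive", "Key in", "Post")] * 950
    + [("Receive", "Query", "Key in", "Post")] * 50,
    "Contract review": [("Draft", "Review", "Sign")] * 20
    + [("Draft", "Review", "Redraft", "Review", "Sign")] * 15
    + [("Draft", "Escalate", "Review", "Sign")] * 10,
}


def automation_candidates(traces_by_process, min_cases=500, min_top_share=0.8):
    """Return processes with high volume whose top variant covers most cases.

    Both thresholds are arbitrary example values.
    """
    candidates = []
    for name, traces in traces_by_process.items():
        if not traces:
            continue  # nothing observed for this process
        variants = Counter(traces)
        total = len(traces)
        top_share = variants.most_common(1)[0][1] / total
        if total >= min_cases and top_share >= min_top_share:
            candidates.append((name, total, len(variants), round(top_share, 2)))
    return candidates


candidates = automation_candidates(process_traces)
print(candidates)
```

With this sample data, only "Invoice entry" passes: it has 1,000 cases and its most common path covers 95% of them. "Contract review" is both low in volume and spread across three paths, so it is a weaker fit for a bot.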

RPA mimics the actions people perform at the computer using the screens (UI). Process mining uses back-end event logs and databases. So, it is missing the UI information that can help with RPA.

Out of this need, companies came up with a way to mine the desktop screen data for process understanding. This has led to screen-based process understanding and recording techniques.

Task Mining and Task recorder – Screen-based Process discovery

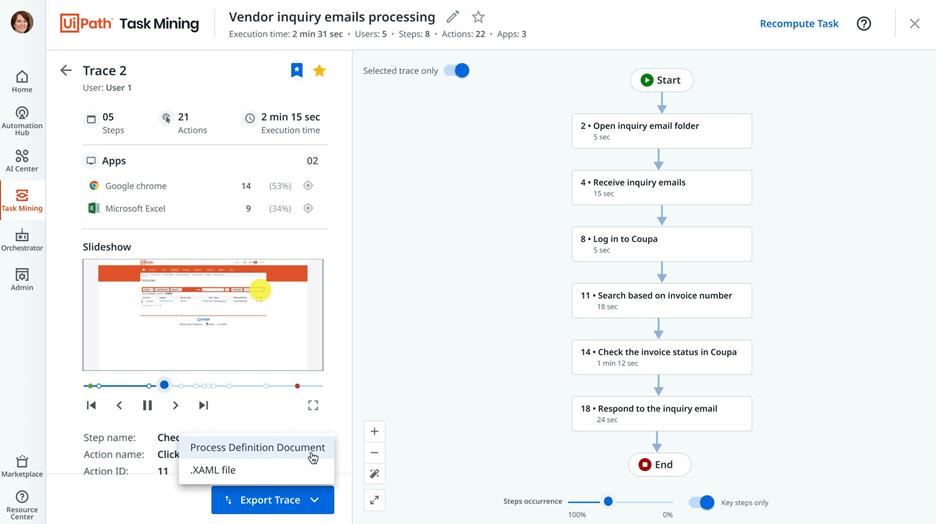

Task Mining records the interactions performed on the computer screen. It then uses computer vision (a form of AI) to analyze and understand the performed tasks. It analyzes actions on the desktop and provides a view of the front-end work. This is a different view compared to the event logs and back-end databases used by process mining.

RPA uses screen or UI elements for automation, and so Task mining data and analysis come in handy. Task mining along with AI can also suggest the next best steps to perform a task.

There are privacy concerns with Task mining though. This is because it records actions performed on the desktop. These tools address this by allowing people to limit applications or actions recorded.

Still, this is a sticking point. So there is another approach called Task recording or Task capture. With these types of tools, people open and record the task steps. Here, people have more control and only capture the actions when they want to. This is usually used to produce documentation for the process flows and not for AI analysis.

Still, application logs and screen recordings by themselves are usually not enough. They do not paint the entire picture of what happens across applications and time. So process tracking is moving toward a more AI-based, data-rich approach.

Process Intelligence With AI – Going Beyond Mining

As we saw, Process mining and Task mining each look at a different type of data. They then use the data and apply AI to give us different perspectives of the process.

There is a different way that sort of combines these perspectives. It aims to give us a unified view of the process. Logically, that would mean creating a view by combining the back-end and front-end data.

But it turns out that the best way to provide this view is to capture desktop interactions at scale and at a deeper level. This means capturing data across workstations and over different time periods. This provides a massive dataset for AI analysis. The data is also captured from the desktop operating system, which provides a deeper level of detail.

Advanced AI-ML techniques are then applied to this rich data to deduce the processes. The resulting AI-generated visualization gives us a richer end-to-end view of the processes. The AI can also continue to check the process and give us continuous feedback to improve.

Moving To Autonomous Processes With AI

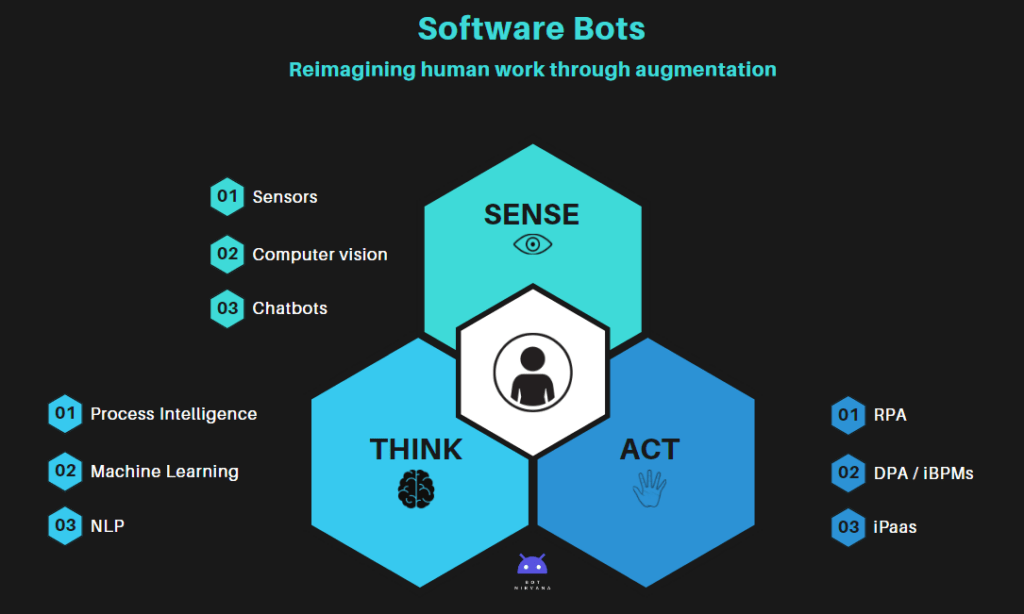

We are moving toward an autonomous Sense-Think-Act “Robotic paradigm” for computer-based work.

With AI, we can continuously monitor our processes (Sense) and decide on the best possible next action (Think). These actions can then be suggested to a human or executed autonomously (Act).
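Here is a toy sketch of one Sense-Think-Act cycle. Everything in it is an assumption for illustration: the "process" is just a list of work item ages in hours, and the Think step uses two hand-written rules as a stand-in for whatever AI model would make the decision in a real system.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    autonomous: bool  # True: execute directly; False: suggest to a human


def sense(queue):
    """Sense: observe the process state (here, a queue of item ages in hours)."""
    return {"pending": len(queue), "oldest_age_hours": max(queue, default=0)}


def think(state, max_age_hours=24, max_pending=5):
    """Think: pick the next action. Simple made-up rules stand in for an AI model."""
    if state["oldest_age_hours"] > max_age_hours:
        return Decision("escalate oldest item", autonomous=False)
    if state["pending"] > max_pending:
        return Decision("rebalance workload", autonomous=True)
    return Decision("no action", autonomous=True)


def act(decision):
    """Act: execute autonomously or hand the suggestion to a person."""
    mode = "executed" if decision.autonomous else "suggested to human"
    return f"{decision.action} ({mode})"


def run_cycle(queue):
    """Run one Sense-Think-Act pass over the current queue."""
    return act(think(sense(queue)))


print(run_cycle([1, 2, 30]))   # one item is older than 24 hours
print(run_cycle([1] * 6))      # more than 5 items are pending
print(run_cycle([]))           # nothing to do
```

The `autonomous` flag is the interesting design choice here: it lets the same loop either carry out an action itself or keep a human in the loop, depending on how much we trust the decision.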

This could eventually mean that many processes would completely disappear! This has started to happen and we can expect more and more processes to become autonomous.

The caveat, though, is that we must be able to address the privacy and ethical concerns that we have with AI. Either way, the humble processes have come a long way. We have advanced from manual processes to autonomous processes.

In a way, we may be reaching the end game for processes as many of them disappear completely and become autonomous!

Before we go, here are the key takeaways:

- Processes originated with the concept of “Division of labor” introduced by Adam Smith in 1776.

- Formal process improvement techniques like Six Sigma, PDCA, Lean, etc. brought rigor to process improvement.

- With computers, we started using computer event data to improve processes. This is called Process mining.

- Process mining has been helping us automate with RPA. This led to screen-based capture techniques like Task mining.

- AI-based process understanding techniques are starting to give a richer end-to-end view of the processes.

- We are moving toward an autonomous Sense-Think-Act “Robotic paradigm” for computer-based work.

We will be diving deeper into the different data-based process discovery and improvement techniques in future posts. So stay tuned!